3D crop imaging quickly identifies high-yielding, stress-resistant plants

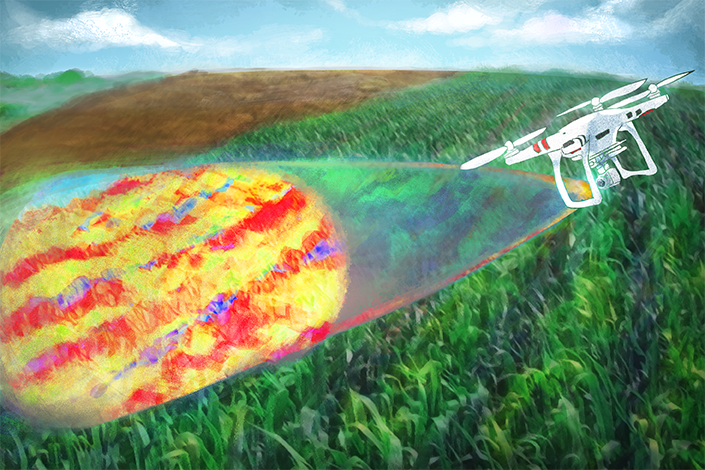

Image: Research Communications and Public Relations

Building three-dimensional point clouds from high-resolution photos taken from unmanned aerial vehicles, or drones, may soon help plant breeders and agronomists save time and money compared with measuring crops manually.

Lonesome Malambo, postdoctoral research associate in the Texas A&M University ecosystem science and management department in College Station, recently published on this subject in the International Journal of Applied Earth Observation and Geoinformation.

He was joined on the study by Texas A&M AgriLife Research scientists Sorin Popescu, Seth Murray and Bill Rooney, their graduate students and others within the Texas A&M University System. Funding was provided by AgriLife Research, the U.S. Department of Agriculture National Institute of Food and Agriculture, Texas Corn Producers Board and United Sorghum Checkoff Program.

“What this multidisciplinary partnership has developed is transformative to corn and sorghum research, not just to replace our standard labor intensive height measurements, but to find new ways to measure how different varieties respond to stress at different times through the growing season,” Murray said. “This will help plant breeders identify higher yielding, more stress-resistant plants faster than ever possible before.”

Crop researchers and breeders need two types of data when deciding which crop improvement selections to make: genetic data and phenotypic data, which describe the physical characteristics of the plant, Malambo said.

Great strides have been made in genetics, he said, but there’s still much work to be done in measuring the physical traits of any crop in a timely and efficient manner. Currently, most measurements are taken from the ground by people walking through fields and measuring plants manually.

Over the past few years, UAV photos have been tested to see what role they can play in helping determine characteristics such as plant height, which, measured over time, can help assess the influence of environmental conditions on plant performance.

Malambo said this study could be the first to generate 3-D point clouds with “structure from motion,” or SfM, techniques over corn and sorghum throughout a growing season. These two crops were selected because their height and canopy vary widely over the season.
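As a rough illustration of how a point cloud can yield a plant height, the sketch below subtracts a low percentile of point elevations (taken as ground) from a high percentile (taken as canopy top) within one plot. The percentile approach, the function names `percentile` and `plot_height`, and the 1st/99th cutoffs are assumptions made for this example; the article does not describe the calculation used in the study.

```python
def percentile(values, q):
    """Linearly interpolated percentile of a list, with q in [0, 100]."""
    ordered = sorted(values)
    if not ordered:
        raise ValueError("percentile() needs at least one value")
    pos = (len(ordered) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(ordered) - 1)
    frac = pos - lo
    return ordered[lo] * (1.0 - frac) + ordered[hi] * frac


def plot_height(points, ground_q=1.0, canopy_q=99.0):
    """Estimate canopy height for one plot from (x, y, z) points in meters.

    Assumption: the lowest points in the plot are bare ground and the
    highest points are the canopy top. Percentiles are used instead of
    min/max so that a few stray points do not dominate the estimate.
    """
    z = [p[2] for p in points]
    return percentile(z, canopy_q) - percentile(z, ground_q)


# Hypothetical plot: elevations spread evenly from 0.00 m to 2.00 m.
demo_points = [(0.0, 0.0, i / 100.0) for i in range(201)]
print(round(plot_height(demo_points), 2))
```

Repeating such a calculation for each flight date would give a height curve per plot over the season, which is the kind of repeated measurement the study set out to evaluate.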

While SfM is not new, it has historically been under-evaluated for repeated plant height estimation because earlier studies were limited to a single date or short UAV campaigns, he said.

In agricultural environments where conditions change due to crop maturity, Malambo said the next logical step was to determine if the methods were consistent, repeatable and accurate over the growth cycle of crops.

He said the SfM technology uses overlapping images to reconstruct the 3-D view of a scene, going beyond the typical flat photos by enabling automated interior and exterior orientation calibration. Small reference targets were placed in fields before each flight.

When a photo is taken from the UAV, it essentially flattens a 3-D scene into 2-D, Malambo explained. SfM tries to reverse this process using geometry, the properties of light and modeling.
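The idea of flattening a scene and then reversing the process can be sketched with a simple pinhole camera model. The code below projects a 3-D point into two overlapping downward-looking images, then recovers the point by intersecting the two viewing rays. It assumes the camera positions and focal length are already known; a real SfM pipeline also has to estimate those and match features across many photos. All names here (`project`, `ray`, `triangulate`) are hypothetical and are not taken from the study.

```python
def project(point, camera, focal=1.0):
    """Project a 3-D point into a downward-looking pinhole camera (3-D to 2-D)."""
    depth = camera[2] - point[2]  # vertical distance from camera to point
    u = focal * (point[0] - camera[0]) / depth
    v = focal * (point[1] - camera[1]) / depth
    return (u, v)


def ray(pixel, focal=1.0):
    """Direction of the viewing ray through an image coordinate."""
    return (pixel[0], pixel[1], -focal)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def triangulate(cam1, pix1, cam2, pix2, focal=1.0):
    """Recover a 3-D point as the midpoint of the closest approach of two rays."""
    d1, d2 = ray(pix1, focal), ray(pix2, focal)
    w = tuple(p - q for p, q in zip(cam1, cam2))
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w), dot(d2, w)
    denom = a * c - b * b
    if denom < 1e-12 * a * c:
        raise ValueError("rays are nearly parallel; no depth can be recovered")
    s = (b * e - c * d) / denom  # distance parameter along ray 1
    t = (a * e - b * d) / denom  # distance parameter along ray 2
    p1 = tuple(o + s * k for o, k in zip(cam1, d1))
    p2 = tuple(o + t * k for o, k in zip(cam2, d2))
    return tuple((m + n) / 2.0 for m, n in zip(p1, p2))


# Hypothetical setup: a plant top 1.5 m above ground, seen from two
# camera positions 10 m apart at 50 m altitude.
plant_top = (3.0, 4.0, 1.5)
cam_a, cam_b = (0.0, 0.0, 50.0), (10.0, 0.0, 50.0)
pix_a, pix_b = project(plant_top, cam_a), project(plant_top, cam_b)
print(triangulate(cam_a, pix_a, cam_b, pix_b))
```

A single image fixes only the direction to the plant, not its distance. The second, overlapping view is what pins down the depth, which is why SfM depends on overlapping photos.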

“Once we recreate the scene, it looks the way it did when we captured it, multidimensional,” he said.